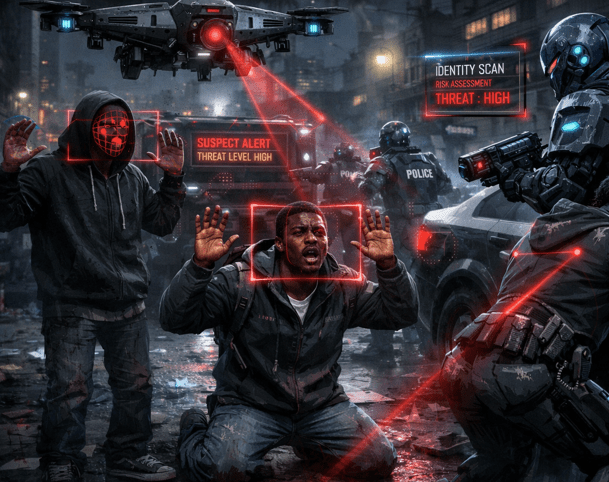

Artificial intelligence (AI) and machine learning systems are increasingly used in U.S. law enforcement through tools like predictive policing algorithms, risk assessment software, and computer vision/facial recognition technology. While proponents claim that these systems improve efficiency and objectivity in fighting crime, a growing body of research, civil-rights advocacy, and policy analysis shows that rather than eliminating bias, AI tools often encode and amplify existing racism in policing, disproportionately impacting Black communities.

1. The Roots of Algorithmic Bias: Dirty Data and Historical Over-Policing

Most predictive policing algorithms rely on historical crime data, the same data produced by decades of blatantly discriminatory enforcement practices. Because Black neighborhoods have long been subject to greater surveillance, stops, and arrests, these patterns get baked into the training data for AI systems.

- The Brennan Center for Justice explains that historical arrest data reflects “systemic inequities,” with misdemeanor arrests concentrated in Black and Latino neighborhoods that already see disproportionate policing. Feeding this biased data into AI tools can cause systems to “predict” higher crime levels and send more police to these communities, reinforcing a feedback loop of surveillance and arrests. (Brennan Center for Justice, “The Dangers of Unregulated AI in Policing”)

- Research summarized by the Greenlining Institute shows that when an algorithm like PredPol was applied to Oakland, it targeted predominantly Black neighborhoods twice as often as White ones, despite similar crime rates. This occurs because AI models trained on biased arrest data interpret high policing activity as high crime. https://greenlining.org/wp-content/uploads/2021/04/Greenlining-Institute-Algorithmic-Bias-Explained-Report-Feb-2021.pdf

- Broad legal analyses note that AI uses historical police activity—not race directly—but still yields discriminatory outcomes because the “historic racial prejudice embedded in [the data]” shapes predictions. https://www.criminallegalnews.org/news/2021/oct/15/real-minority-report-predictive-policing-algorithms-reflect-racial-bias-present-corrupted-historic-databases/

This pattern illustrates the well-known principle of “garbage in, garbage out”: when biased human decisions generate the data used to train models, the models will reproduce and often magnify that bias.

2. Feedback Loops: Predictive Policing and Disproportionate Enforcement

Predictive policing tools seek to send officers to “high-risk” areas based on algorithmic forecasts. But because many Black communities have been historically over-policed, these forecasts can simply replicate where police are already most present, not where crime is actually highest.

- Investigations by civil liberties groups, including the Electronic Frontier Foundation (EFF), cite independent analyses finding that predictive policing software such as PredPol directs officers to predominantly Black neighborhoods at much higher rates than comparable White neighborhoods. https://davisvanguard.org/2025/03/report-claims-predictive-policing-targets-marginalized/

- Policy briefs by organizations like the NAACP explicitly warn that predictive policing may increase racial bias instead of reducing crime, as biased data and lack of transparency lead to disproportionate stops, surveillance, and arrests in Black communities. https://naacp.org/resources/artificial-intelligence-predictive-policing-issue-brief

Academic work also links racial disparities in policing directly to these algorithmic systems. In Chicago, for example, algorithmic prediction tools have been shown to disproportionately assign higher risk scores to Black men, and critics note that the underlying measures are deeply connected to existing enforcement inequities. https://link.springer.com/article/10.1007/s11229-023-04189-0

These patterns deepen the already profound distrust between Black communities and law enforcement and undermine fairness in policing.
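The feedback loop described in this section can be illustrated with a toy simulation. This is a minimal sketch, not a model of PredPol or any real product: the function name `simulate_patrols`, the `concentration` parameter, and all numbers are assumptions made for illustration. Two neighborhoods have the same underlying offense rate, but one starts with more patrols. Because incidents are only recorded where officers are present, a forecast built on recorded incidents keeps sending patrols back to the same place.

```python
def simulate_patrols(true_rate, patrol_share, rounds=10,
                     concentration=1.0, total_patrols=100):
    """Toy feedback-loop model (all parameters are hypothetical).

    true_rate:      underlying offense rate per neighborhood
    patrol_share:   initial fraction of patrols sent to each neighborhood
    concentration:  1.0 allocates patrols in proportion to past records;
                    values above 1.0 mimic "hotspot" tools that focus
                    extra attention on the top-ranked areas
    """
    recorded = [0.0] * len(true_rate)
    history = [list(patrol_share)]
    for _ in range(rounds):
        # Incidents are only recorded where police are present to observe them.
        for i, rate in enumerate(true_rate):
            recorded[i] += rate * patrol_share[i] * total_patrols
        # The "forecast": allocate next round's patrols from past records.
        weights = [r ** concentration for r in recorded]
        total = sum(weights)
        patrol_share = [w / total for w in weights]
        history.append(patrol_share)
    return history


# Identical true offense rates, unequal starting patrol levels.
proportional = simulate_patrols([0.1, 0.1], [0.6, 0.4])
hotspot = simulate_patrols([0.1, 0.1], [0.6, 0.4], concentration=2.0)
print("proportional:", [round(s, 3) for s in proportional[-1]])
print("hotspot:     ", [round(s, 3) for s in hotspot[-1]])
```

In this sketch, proportional allocation never corrects the initial 60/40 imbalance even though both neighborhoods have the same offense rate, and the hotspot-style variant widens the gap each round. Real systems are more complex, but the example shows why recorded data alone cannot reveal that the starting allocation was unfair.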

3. Facial Recognition and Surveillance: Additional AI Bias

Beyond predictive policing, other AI tools such as facial recognition systems are widely documented to perform worse on Black faces than White faces—leading to higher false positives and unjust police actions.

- Reports and investigations show that many facial recognition systems have higher error rates for Black individuals, meaning Black people are more likely to be incorrectly identified as suspects. Although some studies are based outside the U.S., the broader pattern of bias in these technologies is well documented and highly relevant to U.S. law enforcement use. https://stateofblackamerica.org/index.php/authors-essays/21st-century-policing-tools-risk-entrenching-historical-biases

- Civil rights groups have also identified how disproportionate inclusion of Black men’s mugshots and arrest photos in police databases increases the risk of misidentification and wrongful targeting. (State of Black America)

This combination of biased predictive models and flawed recognition tools can normalize heavy surveillance of Black communities and legitimize discriminatory stops or arrests.
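One way to make the error-rate disparity described above concrete is to compute a false positive rate separately for each demographic group in an evaluation set. The sketch below is a hedged illustration only: the function `false_positive_rate_by_group`, the tuple layout, and the sample records are hypothetical and do not come from any of the cited reports or from a real facial recognition system.

```python
from collections import defaultdict


def false_positive_rate_by_group(results):
    """Return the false positive rate per group.

    results: iterable of (group, predicted_match, true_match) tuples,
             where a false positive is a predicted match on a pair of
             images that do not show the same person.
    """
    false_positives = defaultdict(int)
    non_matches = defaultdict(int)
    for group, predicted_match, true_match in results:
        if not true_match:
            non_matches[group] += 1
            if predicted_match:
                false_positives[group] += 1
    # Groups with no true non-matches are omitted to avoid dividing by zero.
    return {g: false_positives[g] / n for g, n in non_matches.items()}


# Synthetic, made-up evaluation records for illustration only.
sample = [
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", True, True),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_b", True, True),
]
print(false_positive_rate_by_group(sample))
```

A gap between groups in this metric means members of one group are more likely to be wrongly flagged as a match, which is the kind of disparity an independent bias audit would need to measure and report.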

4. Transparency, Accountability, and Civil Rights Concerns

AI policing programs often lack transparency, making it difficult for communities to challenge or even understand how decisions about policing are made.

- Many algorithms used by law enforcement are proprietary (“black boxes”), with police departments unable or unwilling to disclose how predictions are generated. Independent oversight and audits are rare. https://naacp.org/resources/artificial-intelligence-predictive-policing-issue-brief

- Civil rights organizations like the NAACP and NAACP Legal Defense Fund argue that absent clear safeguards, AI policing tools threaten civil liberties, equal protection, and community trust. They have called for stronger regulation, transparency requirements, and, in some cases, bans or moratoriums on their use. (NAACP)

5. Why This Matters: Structural Racism Meets New Technology

AI policing doesn’t function in a vacuum; it inherits the structural racism of the U.S. criminal justice system.

- Generations of over-policing Black neighborhoods, unequal enforcement of drug and quality-of-life laws, and racial disparities in arrests are reflected in the data systems that AI relies on. This means that even “neutral” algorithms can produce racially disparate outcomes. (Brennan Center for Justice)

- Critics argue that without intentional intervention—such as rigorous bias audits, publicly accessible methodologies, and legal oversight—these systems risk perpetuating historical injustices under the guise of technological progress.

AI Is Not Objective, and Policing Is Not Race-Neutral

AI systems promise objectivity, but in policing, they too often reproduce and magnify human prejudice. By training on biased data, lacking transparency, and being used without accountability, predictive policing and related AI tools have contributed to discriminatory enforcement practices that disproportionately affect Black communities in the United States.

Movement toward justice requires rejecting the myth that technology is inherently fair and instead demanding oversight, community involvement, and fundamental reforms in how law enforcement uses AI.
